One of the fundamental assumptions underlying the standard model of cosmology, commonly known as the Big Bang, is that the Cosmos comprises a unified, coherent and simultaneous entity – a Universe. It is further assumed that this “Universe” can be mathematically approximated, under that unitary assumption, using a gravitational model, General Relativity, that was devised in the context of our solar system. At the time that assumption was first adopted, the known scale of the Cosmos was that of the Milky Way, our home galaxy, which is orders of magnitude larger and more complex than the solar system.

Subsequently, as the observed Cosmos has extended out to the current range of some 13 billion light years, it has become clear that the Cosmos is orders of magnitude larger and more complex than our galaxy. As a consequence, the resulting Big Bang model has become absurd in its depiction of a cosmogenesis and ludicrous in its depiction of the “current state” of the “Universe”, as the model attempts to reconcile itself with the observed facts of existence.

It will be argued here that the unitary conception of the Cosmos was at its inception, and is now, as illogical as it is incorrect.

I Relativity Theory

The unitary assumption was first adopted a century ago by the mathematician Alexander Friedmann, as a simplification employed to solve the field equations of General Relativity (GR). It imposed a “universal” metric or frame on the Cosmos. This was an illogical or oxymoronic exercise because a universal frame does not exist in the context of Relativity Theory. GR is a relativistic theory because it does not have a universal frame.

There is, of course, an official justification for invoking a universal frame in a relativistic context. Here it is from a recent Sabine Hossenfelder video:

In general relativity, matter, or all kinds of energy really, affect the geometry of space and time. And so, in the presence of matter the universe indeed gets a preferred direction of expansion. And you can be in rest with the universe. This state of rest is usually called the “co-moving frame”, so that’s the reference frame that moves with the universe. This doesn’t disagree with Einstein at all.

The logic here is strained to the point of meaninglessness; it is another example of the tendency of mathematicists to engage in circular reasoning. First we assume a Universe; then we assert that the universal frame, which follows of necessity, must exist; and therefore the unitary assumption is correct!

This universal frame of the Big Bang (BB) model is said to be co-moving, and therefore everything is supposedly OK with Einstein (meaning General Relativity, I guess) too, despite the fact that Einstein would not have agreed with Hossenfelder’s first sentence; he did not believe that General Relativity geometrized gravity, nor did he believe in a causally interacting spacetime. The universal frame of the BB model is indeed co-moving in the sense that it is expanding universally (in the model). That doesn’t make it a non-universal frame, just an expanding one. GR does not have a universal frame, co-moving or not.

Slapping a non-relativistic frame on GR was fundamentally illogical, akin to shoving a square peg into a round hole and insisting the fit is perfect. The result, though, speaks for itself: the Big Bang model is ludicrous and absurd because the unitary assumption is wrong.

II The Speed of Light

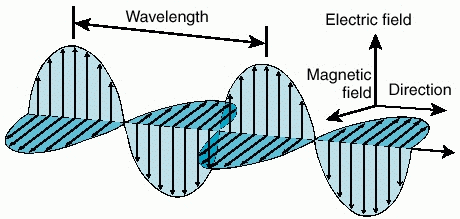

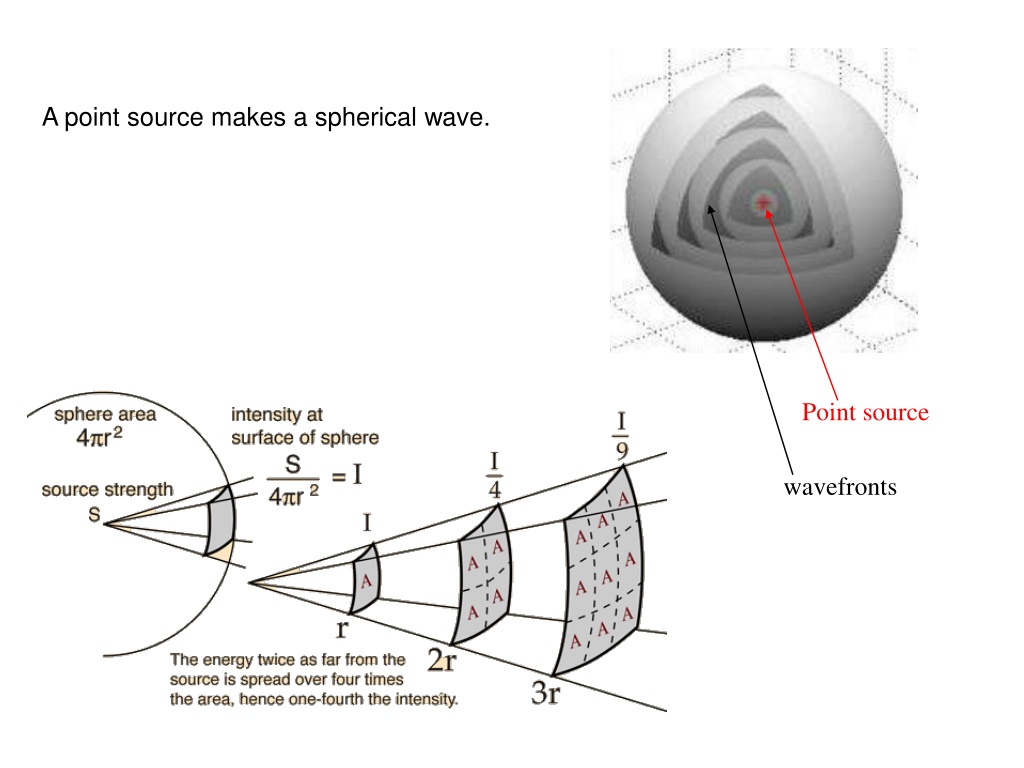

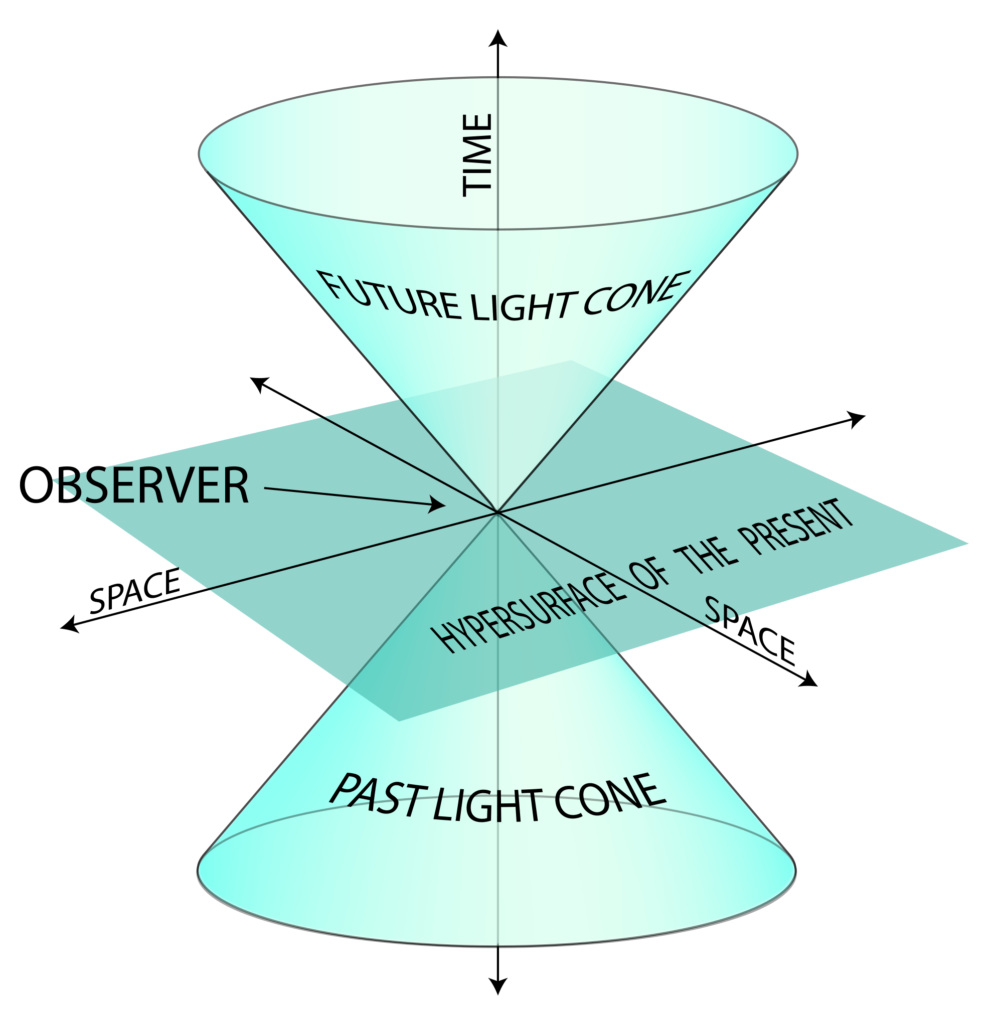

The speed of light in the Cosmos has a theoretical maximum of approximately 3×10⁸ meters per second in inertial and near-inertial conditions. The nearest star to our own, Proxima Centauri, is approximately 4 light years (LY) away; the nearest major galaxy, Andromeda, is 2.5 million LY away. The furthest observed galaxy is 13.4 billion LY away.* This means that our current information about Proxima Centauri is 4 years out of date, for Andromeda it is 2.5 million years out of date, and for the most distant galaxy it is 13.4 billion years out of date.
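As a rough illustration of that arithmetic, the sketch below converts the quoted distances to metres and back into light-travel delays. It is a minimal sketch, assuming straight-line travel at the vacuum speed of light over a static path; the constant and function names are illustrative only. Because the light year is defined as the distance light covers in one Julian year, the delay in years comes out numerically equal to the distance in light years.

```python
# Light-travel delay: how out of date our information about a source is.
# Assumes straight-line travel in vacuum at c over a static path.

C_M_PER_S = 299_792_458                   # speed of light in vacuum, m/s (exact in SI)
JULIAN_YEAR_S = 365.25 * 86_400           # seconds in one Julian year
LIGHT_YEAR_M = C_M_PER_S * JULIAN_YEAR_S  # one light year, about 9.46e15 m


def delay_years(distance_m: float) -> float:
    """Years that light needs to cover distance_m at speed c."""
    return distance_m / C_M_PER_S / JULIAN_YEAR_S


# Approximate distances quoted in the text, in light years.
SOURCES_LY = {
    "Proxima Centauri": 4.0,
    "Andromeda": 2.5e6,
    "most distant galaxy": 13.4e9,
}

for name, ly in SOURCES_LY.items():
    metres = ly * LIGHT_YEAR_M
    print(f"{name}: {metres:.2e} m away, light is {delay_years(metres):.3g} years old")
```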

Despite this hard limit on our information about the Cosmos, the BB model leads cosmologists to believe themselves capable of making grandiose claims, unverifiable of course, about the current state of the Cosmos they like to call a Universe. Within the BB model it is easy to speak of the model Universe’s current state, but this is just another example of the way the BB model fails to depict accurately the Cosmos we observe.

In reality we cannot have, and therefore do not have, information about a wholly imaginary global condition of the Cosmos. Indeed, the Cosmos cannot contain such knowledge.

Modern cosmologists are given to believing they can have knowledge of things that have no physical meaning because they believe their mathematical model is capable of knowing unknowable things. That belief is characteristic of the deluded pseudo-philosophy known as mathematicism; it is a fundamentally unscientific conceit.

III The Cosmological Redshift

The second foundational assumption of the BB model is that the redshift-distance relationship found (or at least confirmed) by the astronomer Edwin Hubble in the late 1920s was caused by some form of recessional velocity. Indeed, it is commonly stated that Hubble discovered that the Universe is expanding. It is a matter of historical record, however, that Hubble himself never fully agreed with that view:

To the very end of his writings he maintained this position, favouring (or at the very least keeping open) the model where no true expansion exists, and therefore that the redshift “represents a hitherto unrecognized principle of nature”.

Allan Sandage

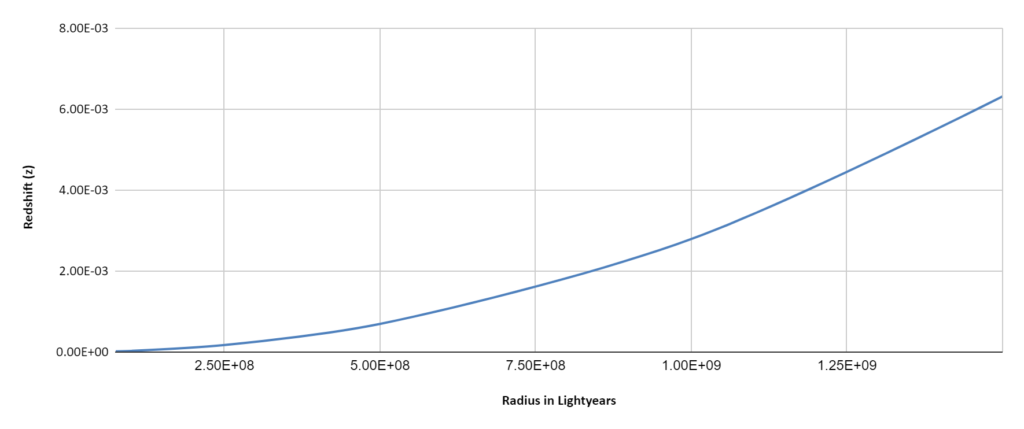

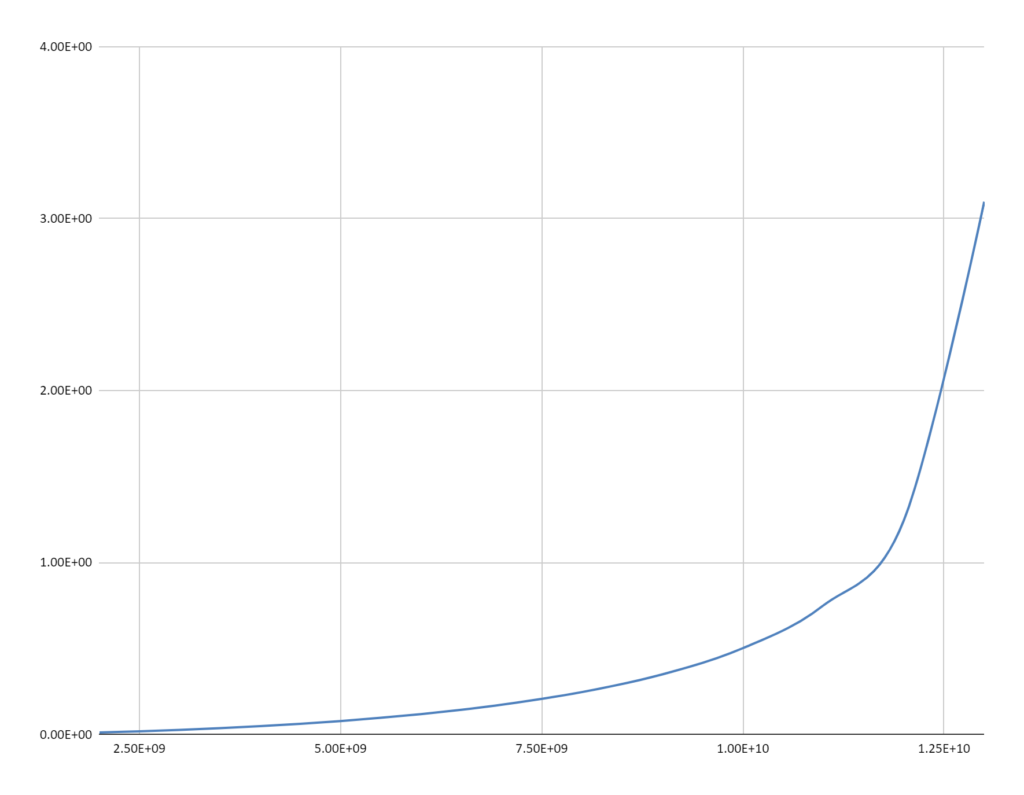

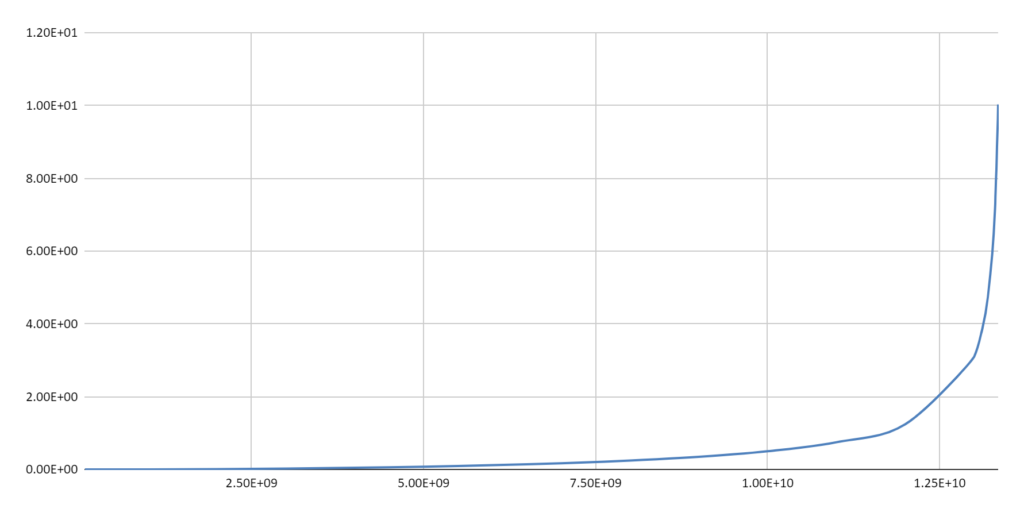

However, for the purposes of this discussion, the specific cause of the cosmological redshift does not matter. The redshift-distance relation implies that, if the Cosmos is of sufficient size, there will always be galaxies so distant that they lie beyond the observational range of any local observer. Light from those most distant sources will be extinguished by the redshift before reaching any observer beyond the redshift-limited range of the source. Even in the context of the BB, it is generally acknowledged that the field of galaxies extends beyond the potentially observable range.

The extent of the Cosmos, therefore, is currently unknown and, to three-dimensionally localized observers such as ourselves, inherently unknowable. A model, such as the BB, that purports to encompass the unknowable is fundamentally unscientific; it is, by its very nature, only a metaphysical construct unrelated to empirical reality.

IV The Cosmos – A Relativistic POV

Given the foregoing considerations it would seem reasonable to dismiss the unitary conception of the Cosmos underlying the Big Bang model. The question then arises, how should we think of the Cosmos?

Unlike the scientists of 100 years ago, when it was still an open debate whether the spiral nebulae, now known to be nearby galaxies, were part of our own galaxy, modern cosmologists have a wealth of data stretching out to 13 billion light years. The quality and depth of that data fall off, however, the farther out we observe.

The Cosmos looks more or less the same in all directions, but it appears to be neither homogeneous nor isotropic nor of a determinable, finite size. That is our view of the Cosmos from here on Earth; it is our point of view (POV).

This geocentric POV is uniquely our own and determines our unique view of the Cosmos; it is a view that belongs solely to us. Observers similarly located in a galaxy 5 billion light years distant from us would see a similar but different Cosmos. Assuming similar technology, when looking in the direction opposite the Milky Way, the distant observers would find in their cosmological POV a vast number of galaxies that lie outside our own POV. When looking back in our direction, the distant observers would not see many of the galaxies that lie within our cosmological POV.

It is only in this sense of our geocentric POV that we can speak of our universe. The contents of our universe do not comprise a physically unified, coherent and simultaneously existing physical entity. The contents of our universe in their entirety are unified only by our locally-based observations of those contents.

The individual galactic components of our universe each lie at the center of their own local POV universe. Nearby galaxies would have POV universes that overlap largely with our own. The universes of the most distant observable galaxies would overlap less than half of our universe. Observers at the far edges of our universe, in opposite directions, would not exist in each other’s POV.
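These overlap claims can be made roughly quantitative with a simple geometric model. The sketch below is a hypothetical simplification, not anything proposed above: it treats each observer’s POV universe as a sphere of the same radius R in static Euclidean space and computes the fraction of our sphere that another observer’s sphere shares, using the standard volume of the lens formed by two intersecting equal spheres whose centres are a distance d apart, π(4R + d)(2R − d)²/12. The radius of 13.4 billion light years is an assumed value borrowed from the most distant observation quoted earlier.

```python
import math


def lens_volume(radius: float, separation: float) -> float:
    """Volume shared by two spheres of equal radius whose centres are
    `separation` apart; zero once the spheres no longer intersect."""
    if separation < 0:
        raise ValueError("separation must be non-negative")
    if separation >= 2 * radius:
        return 0.0
    return math.pi * (4 * radius + separation) * (2 * radius - separation) ** 2 / 12


def overlap_fraction(radius: float, separation: float) -> float:
    """Fraction of one observer's spherical POV shared with another's."""
    sphere_volume = 4 / 3 * math.pi * radius ** 3
    return lens_volume(radius, separation) / sphere_volume


# Hypothetical POV radius in billions of light years (an assumption).
R_GLY = 13.4

# Separations: ourselves, the 5 GLY example, the edge of our range, opposite edges.
for d in (0.0, 5.0, R_GLY, 2 * R_GLY):
    share = overlap_fraction(R_GLY, d)
    print(f"observer {d:4.1f} GLY away shares {share:.1%} of our POV")
```

Under these assumptions an observer at the edge of our range (d = R) shares 5/16, about 31%, of our POV, and two observers at opposite edges (d = 2R) share none, in line with the qualitative statements above.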

So what, then, can we say of the Cosmos? Essentially, it is a human conceptual aggregation of all the matter-energy systems we call galaxies, systems that can never be viewed simultaneously. The field of galaxies extends omni-directionally beyond the range of observation for any given three-dimensionally localized observer, and the Cosmos is therefore neither simultaneously accessible nor knowable. The Cosmos does not have a universal frame or a universal clock ticking. As it does for all 3D observers, the Cosmos tails away from us into a fog of mystery, uncontained by space, time, or the human imagination. We are of the Cosmos but cannot know it in totality, because it does not exist on those terms.

To those who might find this cosmological POV existentially unsettling, it can only be said that human philosophical musings are irrelevant to physical reality; the Cosmos contains us, we do not contain the Cosmos. This is what the Cosmos looks like when the theoretical blinders of the Big Bang model are removed and we adopt the scientific method: studying the things observed in the context of the knowledge of empirical reality that has already been painstakingly bootstrapped over the centuries by following just that method.

___________________________________________________

* In the funhouse mirror of the Big Bang belief system, this 13.4 GLY distance isn’t really a distance; it is the light-travel time expressed as a distance. According to the BB model, at the time the light was emitted the galaxy was only 2.6 GLY distant, but it is “now” 32 GLY away. This “now”, of course, implies a “universal simultaneity” which Relativity Theory prohibits. In the non-expanding Cosmos we actually inhabit, the 13.4 GLY is where GN-z11 was when the light was emitted (if our understanding of the redshift-distance relation holds at that scale). Where it is “now” is not a scientifically meaningful question, because it is not an observable, and there is, in the context of GR, no scientific meaning to a “now” that encompasses both ourselves and such a widely separated object.
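For readers checking the footnote’s BB figures: in the standard model, proper distances between co-moving objects scale with the model’s scale factor a(t), and the cosmological redshift satisfies 1 + z = a(t_now)/a(t_emit). The two model distances quoted above are therefore related as follows. This reproduces only the model’s own bookkeeping, using the footnote’s rounded numbers, and implies a redshift z of roughly 11:

$$
D_{\text{emit}} = \frac{D_{\text{now}}}{1+z}
\quad\Longrightarrow\quad
1 + z \approx \frac{32\ \text{GLY}}{2.6\ \text{GLY}} \approx 12.3
$$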